Easy Company for Grad School Project for Financial Statement Analysis

According to Gartner, poor data quality can cost an organization up to 15 million dollars.

As per McKinsey, 47% of organizations believe that data analytics has impacted the market in their respective industries.

According to Forbes, only 12% of Fortune 1000 companies reported having a CDO (Chief Data Officer) in 2012. This number grew to 67.9% as of 2018, and is only increasing from there. The rise in the number of CDOs is a sign that more and more businesses are realizing the importance of adopting big data analytics.

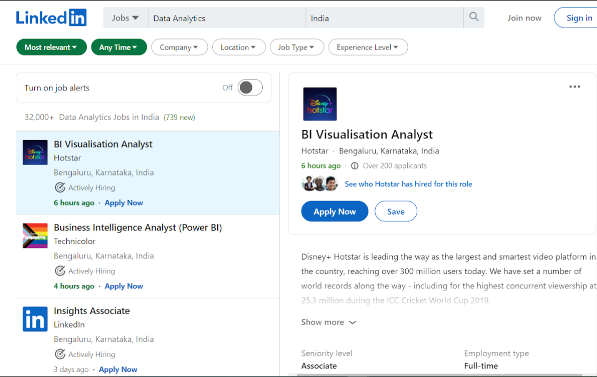

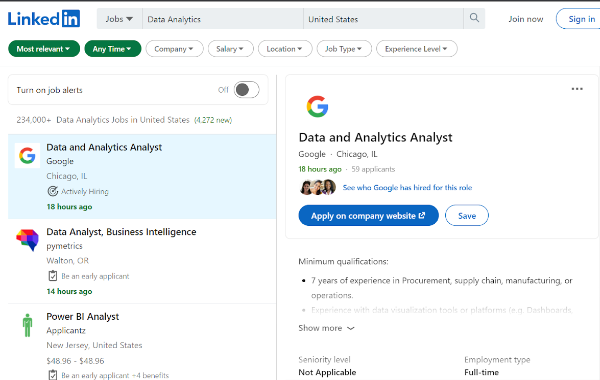

LinkedIn data on the growth of data analytics jobs, as of June 2021, gives clear evidence of the demand for analytics professionals in the industry today.

India has over 32,000 job openings in the data analytics field, while the United States has over 234,000 data analytics jobs to offer. More and more industries are now realising the importance of data analytics and of using the data available to them for their benefit. The amount of data to be processed is only expected to grow, and larger amounts of data imply that more people will be required to process it.

Table of Contents

- Skills Required for Data Analytics Jobs

- Why Should Students Work on Big Data Analytics Projects ?

- 20 Data Analytics Projects for Students

- Data Analytics Projects for Students in Python

- 1) Market Basket Analysis

- 2) Reducing Manufacturing Failures

- 3) Insurance Pricing Forecast

- 4) City Employee Salary Data Analysis

- 5) Topic Modelling using K-means clustering

- Data Analytics Projects for Students in R

- 6) Churn Prediction in Telecom

- 7) Predicting Wine Preferences of Customers using Wine Dataset

- 8) Identifying Product Bundles from Sales Data

- 9) Movie Review Sentiment Analysis

- 10) Store Sales Forecasting

- Big Data Analytics Projects for Students using Hadoop:

- 11) Data Analysis and Visualization using Apache Spark and Zeppelin

- 12) Apache Hive for Real-time Queries and Analytics

- 13) Building a Music Recommendation Engine

- 14) Airline Dataset Analysis

- 15) Analysis of Yelp Dataset Using Hive

- Data Analytics Projects for Grad Students using Spark

- 16) Build a Data Pipeline based on Messaging using PySpark and Hive:

- 17) Predicting Flight Delays

- 18) Event Data Analysis

- 19) Building a Job Portal using Twitter Data

- 20) Implementing Slowly Changing Dimensions in a Data Warehouse:

- Data Analytics Projects on GitHub

- 21) Analyzing CO2 Emissions

- 22) Data Analysis using Clustering

Skills Required for Data Analytics Jobs

With a love for data and a passion for number crunching, anyone can reap the rewards of a successful career in data analytics, be it students, professionals from other industries, or developers looking to make a career transition into data science and analytics. Students do not necessarily need a computer science or math background to land a top big data job role. A blend of technical skills and soft skills, such as excellent communication, storytelling, keen attention to detail and the ability to make logical and mathematical decisions, will take you a long way in your data analytics career. Here is the list of key technical skills required for analytics job roles, which can also be acquired by students or professionals from a non-technical background -

- SQL: Structured Query Language is used to query data stored in databases. A data analytics professional constantly needs to access data, either to retrieve it from where it is stored or to update it when required. Data that has been filtered also has to be stored, often in a new location.

- Programming languages like R and Python: Python and R are two of the most popular programming languages for data analytics. Both provide many libraries that make it convenient to process and manipulate data. A good command of one or more programming languages not only increases your chances of getting a data analytics job, but also helps you negotiate a better data analyst salary.

- Data Visualization Skills: Data visualization is the ability to present one's findings through charts, graphs, or other visual layouts. Visualization skills are vital for any data analytics job role, because insights have to be presented in a comprehensible and visually appealing format.

- Data Cleaning: Data cleaning improves data quality by filtering out noisy, inaccurate, and irrelevant data before analysis. It is a key skill for all analytics job roles.

- Microsoft Excel: A well-built Excel spreadsheet organizes raw data into a more readable format. For more complex data, Excel allows customization of fields and functions that perform calculations on the data in the spreadsheet.

- Machine learning and natural language processing skills: Processing the data may require applying algorithms and techniques from the fields of machine learning and natural language processing.
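To make the SQL skill above concrete, here is a minimal sketch that uses Python's built-in sqlite3 module. The `orders` table, its columns and its rows are made up for illustration; the point is only to show one retrieval query and one update, the two kinds of access described in the SQL item.

```python
import sqlite3

# In-memory database with a hypothetical "orders" table (illustrative names and data).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 120.0), ("bob", 35.5), ("alice", 60.0), ("carol", 15.0)],
)

# Retrieve: total spend per customer, keeping only customers above a threshold.
totals = conn.execute(
    "SELECT customer, SUM(amount) FROM orders "
    "GROUP BY customer HAVING SUM(amount) > 30 "
    "ORDER BY SUM(amount) DESC"
).fetchall()

# Update: correct a stored value in place, then read it back.
conn.execute("UPDATE orders SET amount = 40.0 WHERE customer = 'bob'")
bob_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer = 'bob'"
).fetchone()[0]

print(totals)
print(bob_total)
```

The same statements would work against most relational databases with only minor dialect changes.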

Why Should Students Work on Big Data Analytics Projects ?

A good way to build up your resume before applying for data analytics jobs is to gain hands-on experience, which means actually working on data analytics projects. Here are a few reasons why you should work on data analytics projects:

- Data analytics projects for grad students can help them learn big data analytics by doing instead of just gaining theoretical knowledge.

- Data analytics projects for practice help one identify their strengths and weaknesses with various big data tools and technologies. You may feel that you are better able to understand and grasp some projects or some parts of a project more than others.

- Exploring various big data projects gives a better idea of where your interests lie while working with different analytics tools.

- It helps to build a job-winning data analytics portfolio and sets you apart from other candidates while applying for a job. Hands-on experience with projects on data analytics can build up your practical analytical skill set that is a must-have when applying for analytic job roles.

- Working on projects boosts your confidence with respect to working in the field of data analytics and gives you a sense of accomplishment once you manage to complete a certain project.

20 Data Analytics Projects for Students

Here is a list of big data analytics projects that you can start working on to build up your data analytics skill set. These big data analytics projects for grad students are categorized based on some key skills required for data analytics job roles -

Data Analytics Projects for Students in Python

Python is one of the most popular programming languages for data science. It provides several libraries for data analytics, such as NumPy and SciPy. Some experience working on Python projects can be very helpful in building up data analytics skills.

1) Market Basket Analysis

Market Basket Analysis is a data mining technique used to better understand customers and, in turn, increase sales. Customers' purchasing patterns are observed to identify combinations of products that are bought together. The idea is that if a customer purchases a particular item or group of items (let's call it Product 'A'), the customer is more likely to be interested in another item or group of items (Product 'B'). Market Basket Analysis can increase sales by aiding targeted promotions, recommendations and cross-selling. Even menus can be written with the patterns from market basket analysis in mind. In grocery stores, the aisles and goods can be arranged so that items frequently bought together are placed near each other. Customer market basket analysis can be performed using the Apriori and FP-Growth data mining algorithms.
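The "interest in A implies interest in B" idea is usually measured with support, confidence and lift. The sketch below computes these for item pairs only, on a made-up list of transactions; it is a simplified illustration of the measures that Apriori and FP-Growth rely on, not an implementation of either algorithm. The function name `pair_rules` and the threshold are assumptions.

```python
from collections import Counter
from itertools import combinations

# Made-up shopping baskets, one set of items per transaction.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]


def pair_rules(transactions, min_support=0.4):
    """Return (A, B, support, confidence, lift) for frequent item pairs."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in t)
    pair_counts = Counter(
        pair for t in transactions for pair in combinations(sorted(t), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n  # share of baskets containing both items
        if support < min_support:
            continue
        for x, y in ((a, b), (b, a)):
            confidence = count / item_counts[x]       # P(y | x)
            lift = confidence / (item_counts[y] / n)  # > 1 means positive association
            rules.append((x, y, support, confidence, lift))
    return rules


rules = pair_rules(transactions)
for x, y, support, confidence, lift in rules:
    print(f"{x} -> {y}: support={support:.2f} confidence={confidence:.2f} lift={lift:.2f}")
```

A lift above 1 suggests that the two items appear together more often than they would if purchases were independent.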

Access Solution to Market Basket Analysis using Apriori and Fp Growth Algorithms

2) Reducing Manufacturing Failures

Product-based companies have to ensure that their products are top-notch. To do this, the components of these products and the final products have to be thoroughly tested for possible defects and for optimum performance. Testing is a complex process that costs a company a fair amount of time, energy and money, and it is vital because companies cannot afford to compromise on product quality. However, by applying analytics to the various parts of the manufacturing process, we can aim to reduce the time and cost required for testing while ensuring that product quality remains up to the mark. Using the production line dataset, the goal of this data analytics Python project is to predict internal failures from the tests and measurements recorded for each component. By identifying patterns in previous test scenarios, test cases going forward can be optimized.

Access solution to the data analytics project for students in Python to optimize the production line performance for Bosch.

3) Insurance Pricing Forecast

Insurance comes in many forms: motor, property, travel and health, to name a few. Insurance companies collect small amounts of money, known as premiums, from an individual or an organisation at regular intervals and use this money to compensate the insured for any financial loss covered by the policy. Insurance companies have to set the premium they charge their policyholders so that they do not incur a loss when a claim has to be paid. In the case of health insurance, the insurance company spends a lot of time and effort evaluating health care costs and uses that evaluation to determine the premium. Similarly, insurance companies in other sectors have to closely monitor the costs they have promised to cover. On the other hand, if insurance companies overprice their premiums, policyholders will naturally prefer to be insured by their competitors. Forecasting insurance pricing is an interesting big data project that uses regression analysis to determine the optimal rates for insurance premiums.
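As a rough illustration of how regression analysis can feed into a premium, the sketch below fits a one-variable least-squares line to made-up age and claim-cost figures and then adds a margin on top of the predicted cost. The data, the single `age` feature, the `premium` helper and the 15% loading are all assumptions; a real pricing model would use many more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up data: policyholder age vs. annual claim cost.
ages = [25, 35, 45, 55, 65]
claims = [1200.0, 1500.0, 2100.0, 2600.0, 3100.0]
intercept, slope = fit_line(ages, claims)


def premium(age, loading=0.15):
    """Predicted claim cost plus a hypothetical margin for expenses and profit."""
    expected_cost = intercept + slope * age
    return expected_cost * (1 + loading)


print(f"slope={slope:.1f}, intercept={intercept:.1f}")
print(f"premium for a 40-year-old: {premium(40):.2f}")
```

The loading factor captures the trade-off described above: too low and claims exceed premiums, too high and customers move to competitors.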

Access Solution to Insurance Pricing Forecast

4) City Employee Salary Data Analysis

One of the best ways to understand a city and how it works is to analyze the salaries of the employees residing in it. This also gives the government an evaluation criterion for understanding how citizens are compensated. A closer look at employees and their salaries shows which jobs are in demand and which jobs offer the highest packages, and can give job aspirants an idea of which fields have more to offer in terms of monetary benefits. An analysis of employee salaries in a city also shows how demographics within the city affect job positions and incomes.

The salaries of employees in a city can be visualized using a scatter plot to understand the spread. Grouping by different columns and plotting density plots gives a more distinct idea of the distribution. Box-and-whisker plots can be used to visualize outliers, and quantile plots can be used to check the normality of the data.
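The outliers that a box-and-whisker plot draws as separate points are typically found with the interquartile range (IQR) rule. Here is a minimal sketch of that rule using Python's statistics module; the salary figures are made up, and the 1.5 multiplier is the conventional default rather than something stated in the project.

```python
import statistics

# Made-up annual salaries; one value is far from the rest.
salaries = [42000, 48000, 51000, 53000, 55000, 58000, 61000, 64000, 250000]

# Quartiles split the sorted data into four equal parts.
q1, median, q3 = statistics.quantiles(salaries, n=4)
iqr = q3 - q1

# Points beyond 1.5 * IQR from the quartiles are flagged as outliers,
# which is what the whiskers of a box plot represent.
lower = q1 - 1.5 * iqr
upper = q3 + 1.5 * iqr
outliers = [s for s in salaries if s < lower or s > upper]

print(f"median={median}, IQR={iqr}")
print(f"outliers: {outliers}")
```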

Access Solution to Analysis of San Francisco's Employees' Salaries

5) Topic Modelling using K-means clustering

Topic modelling is a text-mining technique used to summarize the context of a document in a few words, or in other words, to find the topics covered in a particular document. It is used to identify hidden semantic patterns in a body of text and to use them for better decision-making. Topic modelling finds applications in organizing large blocks of textual data, retrieving information from unstructured data and clustering data. For e-commerce websites, topic modelling can be used to group customer reviews and identify common issues faced by consumers. It can also be used to classify large datasets of emails. This data analytics project uses K-means clustering, an unsupervised machine learning technique that can organise several bodies of text into groups based on the topic discussed in each text.
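To show how K-means can group texts by topic, here is a small self-contained sketch. It turns four made-up customer reviews into word-count vectors and runs a basic K-means loop. The fixed initial centroids (the first two documents) keep the run deterministic and are an assumption for illustration; real projects usually use TF-IDF features, stop-word removal and a library implementation.

```python
from collections import Counter

# Made-up customer reviews covering two topics.
docs = [
    "battery drains fast and battery overheats",
    "delivery was late and the package arrived damaged",
    "battery life is short",
    "late delivery and damaged box",
]

vocab = sorted({word for doc in docs for word in doc.split()})


def vectorize(text):
    """Bag-of-words vector: one count per vocabulary word."""
    counts = Counter(text.split())
    return [counts[word] for word in vocab]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, init_centroids, iterations=10):
    centroids = [list(c) for c in init_centroids]
    labels = []
    for _ in range(iterations):
        # Assignment step: each vector goes to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(v, centroids[k]))
            for v in vectors
        ]
        # Update step: each centroid becomes the mean of its members.
        for k in range(len(centroids)):
            members = [v for v, lab in zip(vectors, labels) if lab == k]
            if members:
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return labels


vectors = [vectorize(doc) for doc in docs]
labels = kmeans(vectors, init_centroids=[vectors[0], vectors[1]])
print(labels)
```

On this toy data the battery reviews end up in one cluster and the delivery reviews in the other.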

Access Solution to Text Modelling using K-means Clustering

Data Analytics Projects for Students in R

Like Python, R too is a very popular programming language among data analysts. It provides multiple tools to make it easier to work with data analysis and is highly attractive in the data analytics job market.

6) Churn Prediction in Telecom

The success of a business depends on new customers, but it is just as important to retain existing customers in order to cut down on customer acquisition costs. Customer churn occurs when a customer decides to stop using the products or services offered by a company. Like other industries, the telecom industry needs existing customers to continue their relationship with the company. Customer churn in the telecom industry can have various causes, including call drops, network unavailability, poor service quality and lower rates from competitors. By analyzing customer data, the probability of churn can be calculated for individual customers. A close look at the findings from this analysis can warn telecom companies about the areas that need improvement to raise customer satisfaction. This helps telecom companies serve their customers better and, as a result, reduce customer churn and improve profitability. Machine learning techniques can be applied to perform customer churn prediction.

Access Solution to Customer Churn Prediction using Telecom Dataset

7) Predicting Wine Preferences of Customers using Wine Dataset

According to Research and Markets, the wine industry was valued at $157.6 billion in 2018 and is estimated to reach $201.2 billion in 2025. Wine tasting, too, is a very popular hobby among many adults. Wine is known to have several health benefits as it contains antioxidants. But, like most other products, customers would naturally prefer to have wine that is of superior quality. Wine is placed into different categories based on smell, flavour and taste. Furthermore, there are various factors that affect the quality of wine. A careful analysis of the dataset along with the various factors associated with each wine reveals some repeated patterns that can be associated with higher quality wines. This can be beneficial to companies manufacturing wine to ensure that they maintain top-notch quality of wine and keep their customers content. Analysis of the factors that affect wine quality will involve data munging, where raw data will be transformed from one format to another. The application of various plotting techniques and regression techniques will help to identify some factors that influence wine quality.

Access Solution to Assessing Quality of Red Wine

8) Identifying Product Bundles from Sales Data

Similar to the market basket analysis approach, the goal of this data analytics project using R is to identify products that are frequently purchased together by customers. Accordingly, these bundles can be sold together or placed together as a marketing strategy. Time series clustering machine learning techniques can be used to identify product bundles. Time series clustering is a data mining technique which involves grouping of data points based on certain similarities. Observing several customers who purchase certain items together and finding similarities in these patterns can help stores reach targeted audiences with the right kind of promotion strategies.

Access Solution to Identifying Product Bundles from Sales Data

9) Movie Review Sentiment Analysis

Sentiment analysis involves classifying a remark as positive, negative or neutral. Finer-grained classifications are also possible, such as "strongly agree, agree, neutral, disagree, strongly disagree". By analyzing reviews written by individuals who have watched a particular movie, it is possible to produce better movie recommendations. With the popularity of social media, it is very easy to express one's sentiments towards a particular movie, and these reviews have many repercussions. Sentiment analysis of the reviews can be used to identify interest patterns in the audience, which can in turn be used to generate recommendations. Sarcasm, ambiguous language, negations and multipolarity add to the challenge of categorizing reviews by sentiment. Through this project, you will learn more about part-of-speech tagging, the difference between stemming and lemmatization, and applications of sparse matrices. A Naive Bayes model and an SVM (Support Vector Machine) can be trained on the reviews and then used to make predictions.
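To give a feel for the Naive Bayes approach mentioned above, here is a minimal multinomial Naive Bayes classifier written from scratch with Laplace smoothing. The four training reviews and the class name `NaiveBayes` are made up for illustration; it skips tokenization, stemming and lemmatization, and a real project would more likely use a library implementation.

```python
import math
from collections import Counter, defaultdict

# Made-up labelled reviews.
train = [
    ("a wonderful moving film", "pos"),
    ("great acting and a great story", "pos"),
    ("boring plot and terrible acting", "neg"),
    ("a dull terrible film", "neg"),
]


class NaiveBayes:
    def fit(self, samples):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.class_counts = Counter()            # label -> number of documents
        for text, label in samples:
            self.class_counts[label] += 1
            self.word_counts[label].update(text.split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            total_words = sum(self.word_counts[label].values())
            score = math.log(n_docs / total_docs)  # log prior
            for word in text.split():
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                # Laplace (add-one) smoothing avoids zero probabilities.
                likelihood = (self.word_counts[label][word] + 1) / (
                    total_words + len(self.vocab)
                )
                score += math.log(likelihood)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NaiveBayes().fit(train)
print(model.predict("great film"))
print(model.predict("terrible boring"))
```

Working in log space keeps the product of many small probabilities from underflowing.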

Access Solution to Movie Review Sentiment Analysis

10) Store Sales Forecasting

Sales for a certain product vary based on several factors. Some of these factors include seasonality, locality, reduced competition, advertisements and promotions. For good inventory management, it is very important for a store to have a predicted estimate on sales for various products on sale. Using machine learning, it is possible to find patterns which affect the sales in a particular store from the past data. This past data will be used to train the model. Once the patterns are identified, a store can get a better understanding of which of its products are in demand. This allows for better inventory management, wherein the store can ensure that the demands of its customers can be met by stocking up with sufficient products to meet the needs while at the same time not overstocking, so that wastage is minimized and profits are maximized. For seasons when the sales are expected to be higher, a store can hire more staff to cater to these demands. Sales Forecasting also helps in giving a better idea of a store's growth.
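Before training a machine learning model, it helps to have simple baselines to compare against. The sketch below shows two common ones on made-up weekly sales: a moving average and a seasonal naive forecast that repeats the value from one season earlier. The function names, the data and the season length of 4 are assumptions for illustration.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def seasonal_naive_forecast(history, season_length):
    """Forecast the next value as the value observed one full season earlier."""
    return history[-season_length]


# Made-up weekly unit sales with a repeating four-week pattern.
weekly_sales = [100, 120, 90, 150, 110, 130, 95, 160]

ma = moving_average_forecast(weekly_sales)
sn = seasonal_naive_forecast(weekly_sales, season_length=4)

print(f"moving average forecast: {ma:.1f}")
print(f"seasonal naive forecast: {sn}")
```

A learned model is only worth deploying for inventory planning if it beats baselines like these on held-out data.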

Here is an example of Sales Forecasting for Walmart stores.

Big Data Analytics Projects for Students using Hadoop:

Working on data analytics projects is an excellent way to gain a better understanding of popular big data tools like Hadoop, Spark, Kafka, Kylin, and others. These analytics project ideas will help you master fundamental big data skills in Hadoop and related big data technologies. These Hadoop projects for practice will teach you about the various components of the Hadoop ecosystem and give you more insight into how these components can be integrated with other big data tools in the real world.

11) Data Analysis and Visualization using Apache Spark and Zeppelin

Apache Zeppelin is a browser-based notebook that allows multiple professionals involved in data analysis to analyze data interactively. A notebook, in this case, is a code execution environment that allows code to be created and shared. Zeppelin lets individuals or teams collaborate on data visualization, and it provides built-in Apache Spark integration. Apache Spark is an open source data processing engine used for large datasets. It can run on Hadoop clusters and extends the MapReduce model to support other forms of computation more efficiently, including interactive queries and stream processing. This big data project can help build familiarity with these two tools and with how they can be used for data analysis and visualization.

Access Solution to Data Analysis and Visualization using Apache Spark and Zeppelin

12) Apache Hive for Real-time Queries and Analytics

Apache Hive is a data warehouse software project built on top of Apache Hadoop. Hive provides an SQL-like interface for querying data stored in the Hadoop ecosystem for the purpose of analysis. This project is a good way to learn how Apache Hive can be used for real-time processing. In real-world applications, data often has to be retrieved from the source in real time and processed as soon as it arrives. For example, Google Maps processes traffic data in real time: as soon as it receives information from the source, the result is shown in the application. Real-time data processing is required for applications whose users rely on the most up-to-date information.

Access Solution to Using Apache Hive for Real-time Queries and Analytics

13) Building a Music Recommendation Engine

A recommendation engine is built using machine learning techniques based on the principle that patterns can be found among individuals who engage in a particular activity, in this case, listening to music. It is a data filtering technique that is used to recommend music to an individual based on consumer behaviour data. This consumer behavior data may find patterns based on several factors including geographic location, age group, sex and trends. Music preferences may also vary from individual to individual of the same age group living in similar localities. In such cases, it is important to identify patterns in the type of music interests unique to a particular individual by observing songs that an individual listens to and the songs that result in a positive feedback from that particular consumer. By identifying patterns in interest particular to specific individuals, music recommendations can be generated. In this manner, consumers are kept satisfied with the type of music that they listen to, and happy customers result in flourishing businesses.
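One simple way to turn listening patterns into recommendations is user-based collaborative filtering: find listeners with similar histories and suggest what they play that the target user has not heard. The sketch below does this with cosine similarity on made-up play counts. The user names, song names and the `recommend` helper are hypothetical, and this is only one of several techniques such an engine could use.

```python
import math

# Made-up play counts: user -> {song: number of plays}.
plays = {
    "ana": {"jazz_1": 5, "jazz_2": 3},
    "ben": {"jazz_1": 4, "jazz_2": 2, "jazz_3": 5},
    "cy": {"rock_1": 6, "rock_2": 4},
}


def cosine(u, v):
    """Cosine similarity between two sparse play-count vectors."""
    shared = set(u) & set(v)
    dot = sum(u[s] * v[s] for s in shared)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(target, plays, top_n=2):
    """Rank unheard songs by the similarity-weighted plays of other users."""
    scores = {}
    for other, history in plays.items():
        if other == target:
            continue
        sim = cosine(plays[target], history)
        if sim <= 0:
            continue  # users with nothing in common contribute nothing
        for song, count in history.items():
            if song not in plays[target]:
                scores[song] = scores.get(song, 0.0) + sim * count
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", plays))
```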

Access Solution to Music Recommendation Engine

14) Airline Dataset Analysis

From preflight to post-flight operations, data analytics plays a vital role in the aviation industry. Analysis of airline data can help customize the travel experience and make it more customer-oriented. A few examples of data analytics in aviation include identifying the demand for flights on popular routes based on seasonality, and identifying trends and patterns in flight delays. Even technical issues can be monitored more closely to see whether older aircraft are prone to more problems. A challenge in analyzing the airline dataset is the large number of variables and data items that have to be analysed for each area where improvements are needed.

Access Solution to Analysis of Airline Dataset

15) Analysis of Yelp Dataset Using Hive

According to Wikipedia, in 2020, Yelp had 43 million unique visitors to its desktop webpages and 52 million unique visitors to its mobile sites. This should be an indication of the sheer size of data available for analysis. Yelp is a commonly used online directory that publishes crowd-sourced reviews about businesses in a particular locality. The idea of this project is to get comfortable using Apache Hadoop and Hive for handling large datasets and applying the data engineering principles which involve processing, storage and retrieval on the Yelp dataset. Analysis of Yelp dataset can be beneficial to businesses to get a better understanding of their customers' responses to not only their business but also to their competitors.

Access Solution to Analysis of Yelp Dataset

Data Analytics Projects for Grad Students using Spark

Spark projects are a good way for students to gain a thorough understanding of the various components of the Spark ecosystem, including Spark SQL, Spark Streaming, Spark MLlib and Spark GraphX. These Spark projects for practice will help you understand the real-time applications of Apache Spark in the industry.

16) Build a Data Pipeline based on Messaging using PySpark and Hive:

A data pipeline is a system that ingests raw data from a source and moves this data to a destination where it can be stored or processed further for analysis. The pipeline may also require the data to be filtered or cleaned for various purposes. Data pipelines are beneficial if the data is required for multiple purposes. The stored data can be accessed and used for its necessary purposes. Here the data will be streamed real-time from an external API. This data has to be parsed and stored for further analysis. The data will then be sent to Kafka for processing using PySpark. Once the data is processed, it will again be picked up by Spark and stored in the new processed format in HDFS (Hadoop Distributed File System). A Hive external table is then created on top of HDFS which will allow the cleaned, processed data to be deployed. This project will help in understanding how real-time streaming data has to be parsed and stored. This big data project will help you understand end-to-end workflow on how to create and manage a data pipeline.
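The parse, clean and store stages described above can be sketched in plain Python with generators. This is only a stand-in for the real pipeline: it does not use Kafka, PySpark, HDFS or Hive, the raw messages are made up, and the field names `id` and `value` are assumptions. It shows the shape of the flow, where each stage consumes records from the previous one.

```python
import json


def parse(raw_messages):
    """Ingest stage: decode raw JSON strings, dropping malformed messages."""
    for raw in raw_messages:
        try:
            record = json.loads(raw)
        except json.JSONDecodeError:
            continue
        if isinstance(record, dict):
            yield record


def clean(records, required=("id", "value")):
    """Cleaning stage: keep complete records and normalize field types."""
    for record in records:
        if all(record.get(field) is not None for field in required):
            yield {"id": record["id"], "value": float(record["value"])}


def store(records, sink):
    """Storage stage: append processed records to a sink (here, a list)."""
    for record in records:
        sink.append(record)
    return sink


# Made-up messages standing in for data streamed from an external API.
raw = [
    '{"id": 1, "value": "3.5"}',
    "not json",
    '{"id": 2}',
    '{"id": 3, "value": 7}',
]

sink = store(clean(parse(raw)), [])
print(sink)
```

Because the stages are generators, records flow through one at a time, which mirrors how a streaming pipeline handles data as it arrives.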

Access Solution to building a Data Pipeline based on Messaging using PySpark and Hive

17) Predicting Flight Delays

Flight delays impact airlines, passengers and air traffic. Flight delays are not just inconvenient, but are also very bad for business. Accurate prediction of flight delays would be a huge boon to the airline industry. Building accurate models to predict flight delays becomes challenging due to the complexity involved in the air transportation system and the large chunks of flight data available. OLAP cube design can help you jump-start on how to go about building a flight delay prediction model. An OLAP (online analytical processing) cube is a multi-dimensional array of data that allows fast analysis of data based on the multiple dimensions that are associated with a business problem.
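The idea of an OLAP cube can be illustrated without a database: pre-aggregate a measure for every combination of dimensions so that any slice can be answered with a single lookup. The sketch below does this for average delay over made-up flight records. The dimension names, the data and the `build_cube` and `average` helpers are hypothetical, and a production cube would live in a dedicated OLAP engine.

```python
from collections import defaultdict
from itertools import combinations

# Made-up flight records; "delay" is the measure, the rest are dimensions.
flights = [
    {"carrier": "AA", "origin": "JFK", "month": 1, "delay": 30},
    {"carrier": "AA", "origin": "JFK", "month": 2, "delay": 10},
    {"carrier": "AA", "origin": "ORD", "month": 1, "delay": 50},
    {"carrier": "DL", "origin": "JFK", "month": 1, "delay": 0},
]
DIMENSIONS = ("carrier", "origin", "month")


def build_cube(rows, dimensions, measure):
    """Aggregate [sum, count] of the measure for every subset of dimensions."""
    cube = defaultdict(lambda: [0, 0])
    for row in rows:
        for size in range(len(dimensions) + 1):
            for dims in combinations(dimensions, size):
                key = tuple((d, row[d]) for d in dims)
                cell = cube[key]
                cell[0] += row[measure]
                cell[1] += 1
    return cube


def average(cube, **filters):
    """Look up the average measure for a slice, e.g. carrier='AA'."""
    key = tuple((d, filters[d]) for d in DIMENSIONS if d in filters)
    cell = cube.get(key)
    if cell is None:
        return None  # no rows match this slice
    total, count = cell
    return total / count


cube = build_cube(flights, DIMENSIONS, "delay")
print(average(cube))                         # all flights
print(average(cube, carrier="AA"))           # roll-up by carrier
print(average(cube, origin="JFK", month=1))  # slice on two dimensions
```

The trade-off is the usual one for cubes: more storage and build time in exchange for fast answers across many dimension combinations.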

Access Solution to Predicting Flight Delays

18) Event Data Analysis

Event data analysis is the process of applying business logic to process and analyse data that is streamed at an event level, in order to produce data that is more suitable for querying. Event data refers to the actions performed by various entities. In event data, a particular data point can be associated with multiple entities, all of which contribute to the occurrence of a particular event. It is important to remember that events can be dynamic, continuously influenced by outside events, and non-linear, which means that they do not occur at regular intervals of time. This is what makes event data analysis a challenging task. The goal of event data analysis is to enable the system to identify critical events based on patterns observed in previous data. Opportunities and threats can then be anticipated and responded to before they occur. If prediction is not possible, event data processing can still ensure a quick response immediately after an event has occurred.

Here is an example of an analysis done of real time streaming event data from New York City accidents dataset.

19) Building a Job Portal using Twitter Data

The social networking site Twitter had a whopping 187 million monetizable daily active users (mDAUs) as of 2020, and this number was expected to grow by 2.4% in 2021. On Twitter, users can post and interact with each other through messages referred to as 'tweets'. The goal of this big data project is to stream data from Twitter to locate recently published jobs, process them and make them easier to find for users who are actively searching for jobs, via easy-to-use job search APIs. A notification feature can also be added for users who have subscribed to job search notifications. The challenge is to process the large amounts of data streamed on Twitter in real time: filter the tweets that are related to jobs, apply a further filter for domain-specific jobs, and then update the job portal as quickly as possible so that it remains up to date. Similarly, once a job listing is filled, the job portal has to be updated once more to indicate that the role is no longer vacant.

Access Solution to Building IT Job Portal using Twitter Data

20) Implementing Slowly Changing Dimensions in a Data Warehouse:

Slowly Changing Dimensions (SCDs) are data entities in a data warehouse that remain fairly static over time and change slowly rather than at regular or frequent intervals. A data warehouse is a data management system built to support business intelligence activities, primarily data analytics. It is a central repository of data integrated from various sources. Because the data sits in a central location, data warehouses make it possible to arrive at decisions faster. SCDs in a data warehouse change very rarely, but when they do, it is important to have a good system in place that captures these changes and reflects them throughout the data warehouse wherever necessary. There are several techniques for capturing such changes, and choosing the right one is the key to maintaining data integrity. For example, in some cases it is acceptable to overwrite a data value, while in others it is important to keep a reference to the old value as well.
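The two cases in the example above, overwriting a value versus keeping the old one, correspond to what are commonly called Type 1 and Type 2 slowly changing dimensions. The sketch below shows both on in-memory Python structures. The column names `start_date` and `end_date`, the helper names and the customer data are assumptions for illustration; a real warehouse would do this with SQL or an ETL tool.

```python
from datetime import date


def apply_type1(dimension, key, new_attrs):
    """Type 1: overwrite the current values; no history is kept."""
    dimension[key].update(new_attrs)


def apply_type2(history, key, new_attrs, effective):
    """Type 2: close the current row and append a new versioned row."""
    for row in history:
        if row["key"] == key and row["end_date"] is None:
            if all(row.get(k) == v for k, v in new_attrs.items()):
                return  # nothing changed, keep the current version
            row["end_date"] = effective  # expire the old version
            history.append(
                {**row, **new_attrs, "start_date": effective, "end_date": None}
            )
            return
    # First time this key is seen: insert the initial version.
    history.append(
        {"key": key, **new_attrs, "start_date": effective, "end_date": None}
    )


# Type 1 example: the old city is lost after the update.
customers = {1: {"city": "Pune"}}
apply_type1(customers, 1, {"city": "Mumbai"})

# Type 2 example: both versions are kept with validity dates.
history = []
apply_type2(history, 1, {"city": "Pune"}, date(2020, 1, 1))
apply_type2(history, 1, {"city": "Mumbai"}, date(2021, 6, 1))

print(customers)
for row in history:
    print(row)
```

Type 2 costs more storage but lets past facts be reported against the dimension values that were valid at the time.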

Here is an approach to implementing Slowly Changing Dimensions in a Data Warehouse.

Data Analytics Projects on GitHub

If you are looking for data analytics projects for final year students, check out this section for data analytics projects on GitHub.

21) Analyzing CO2 Emissions

Is climate change real? The world stands divided on this topic, primarily into two groups. One group is in favor and believes global warming is the cause behind it, while the other believes climate change is a hoax. The former group blames the emission of greenhouse gases like CO2 for the rise in global temperature. It is difficult to understand how fair it is to blame those emissions but can a data analyst help? Yes, explore the project idea to know how.

Project Idea: Download the sample dataset 'fuelconsumption.csv' and load it into a Pandas dataframe. Explore the dataset using dataframe methods such as df.head() and df.describe(). Fit a regression model to the data (linear regression is a natural starting point, since CO2 emissions are a continuous quantity) and plot graphs using the matplotlib library to draw conclusions. As a challenge, split the dataset into training and test subsets and try to estimate unseen values.

Repository Link: Data Analytics Project on CO2 Emissions by arjunmann73

We hope that you found some of these data analytics project ideas interesting enough to learn big data and practice with. These projects would be very beneficial for students to take up as their final semester projects. In addition, if you have begun applying for summer internships, we are sure these big data projects can be of great help in building up your data analytics portfolio and landing a top data gig. If you are looking to explore a few more interesting data analytics project ideas, check out these big data and data science projects. We add new end-to-end real-world analytics and data science projects every month that come with documentation, explanatory videos, a downloadable dataset for the project and reusable code.

22) Data Analysis using Clustering

One of the most popular machine learning algorithms that data scientists use is the K-Means clustering algorithm. It groups together data points that have similar characteristics. In machine learning, it can be used to assign a new data point to the cluster whose members it most resembles. The algorithm can also be used to analyze which entities in a dataset possess similar features.

Project Idea: Download the sample dataset of private and public universities. Use Python's Pandas dataframe functions like df.info(), df.describe(), etc. to analyze the data. Prepare a profiling report for the data using the pandas_profiling library and list the insightful conclusions about the variables. Use the seaborn and matplotlib libraries to perform exploratory data analysis. Use the sklearn library to group the data into clusters having similar properties.

Repository Link: Data-Analytics-Project K-Means Clustering by thealongsider

Source: https://www.projectpro.io/article/big-data-analytics-projects-for-students-/436
